Emerging artificial intelligence technology (ATI) raises profound ethical and legal considerations that shape its development and deployment. This article explores the potential ethical implications, legal frameworks, and societal impact of ATI, providing insights for responsible and equitable implementation.

As ATI advances, it presents a myriad of ethical dilemmas and legal challenges that require careful navigation. Understanding these considerations is paramount for stakeholders involved in the design, implementation, and regulation of ATI.

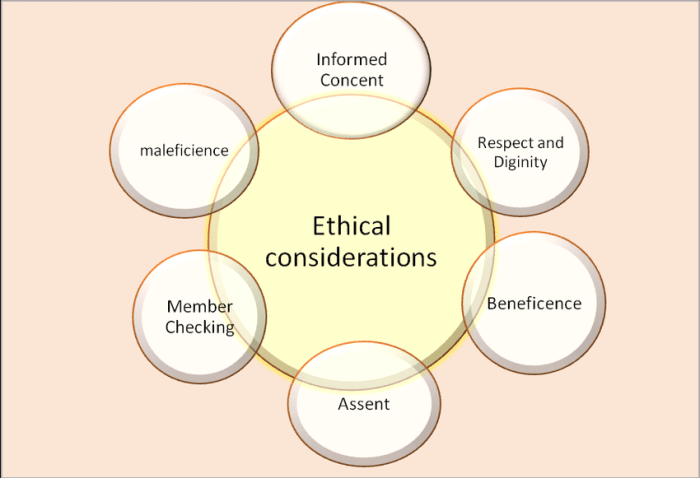

Ethical Considerations

Medical imaging is one illustrative application of ATI: using AI for automated tumor identification presents several ethical implications that require careful consideration.

One of the primary ethical concerns with ATI is the potential for bias and discrimination. AI algorithms are trained on large datasets, and if these datasets are not representative of the population, the algorithm may learn biased patterns that can lead to unfair or inaccurate results.
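One way to make the representativeness concern concrete is to compare the share of each group in a training dataset against a reference population. The following is a minimal sketch, not a definitive audit method: the function name `representation_gaps`, the group labels, the reference shares, and the 5-percentage-point tolerance are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares, tolerance=0.05):
    """Return groups whose share of the dataset differs from the
    reference population share by more than `tolerance`.

    Positive values mean the group is over-represented in the dataset,
    negative values mean it is under-represented.
    """
    counts = Counter(sample_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in population_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical data: one group label per training record.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
# Hypothetical reference shares for the population the system will serve.
reference_shares = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(training_groups, reference_shares)
print(gaps)
```

A check like this only flags imbalances in group counts. It does not show whether a model trained on the data actually behaves unfairly, so it is best treated as a first screening step.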

Transparency and Accountability

Transparency and accountability are crucial in the use of ATI. It is essential to ensure that the algorithms used are transparent and explainable, so that healthcare professionals can understand how they work and make informed decisions about their use.

Furthermore, there should be clear mechanisms for accountability when AI systems make mistakes or cause harm. This includes establishing clear lines of responsibility and ensuring that appropriate measures are taken to address any negative consequences.

Legal Considerations

The development and deployment of Artificial Intelligence Technologies (ATI) raise various legal concerns that need to be carefully considered. These concerns stem from the potential impact of ATI on individuals, organizations, and society as a whole. To address these concerns, it is essential to identify the legal frameworks that apply to ATI and understand the potential legal liability associated with its use.

The legal frameworks that apply to ATI vary depending on the jurisdiction in which it is being developed and deployed. However, some common legal frameworks that may be relevant include:

- Data protection and privacy laws

- Intellectual property laws

- Product liability laws

- Competition laws

- Employment laws

These legal frameworks impose various obligations on those who develop and deploy ATI. For example, data protection and privacy laws may require that personal data collected by ATI is processed in a fair and transparent manner, and that individuals have the right to access and control their personal data.

Intellectual property laws may protect the intellectual property rights of those who develop ATI, while product liability laws may impose liability on those who manufacture or sell ATI products that cause harm to users.

Potential Legal Liability

The use of ATI may also give rise to potential legal liability. For example, if an ATI system makes a decision that results in harm to an individual or organization, the developers and deployers of the system may be held liable for negligence or other torts.

Similarly, if an ATI system is used to discriminate against individuals on the basis of race, gender, or other protected characteristics, the developers and deployers of the system may be held liable for discrimination.

It is important to note that the legal liability associated with ATI is still evolving. As ATI becomes more widespread and sophisticated, it is likely that new legal issues will arise. It is therefore important for those who develop and deploy ATI to be aware of the potential legal risks and to take steps to mitigate these risks.

Recommendations

Several steps can help address the legal concerns related to ATI:

- Developing clear and comprehensive legal frameworks for ATI

- Providing guidance to those who develop and deploy ATI on how to comply with legal requirements

- Establishing mechanisms for resolving disputes related to ATI

- Educating the public about the legal implications of ATI

By taking these steps, it is possible to mitigate the legal risks associated with ATI and to ensure that it is used in a responsible and ethical manner.

Data Privacy and Security

In the context of ATI, data privacy and security are of utmost importance due to the sensitive nature of the information being processed. Data privacy refers to the protection of personal and sensitive information from unauthorized access, use, or disclosure. Data security involves safeguarding data from unauthorized modification, destruction, or loss.

Best practices for protecting data privacy and security in ATI systems include:

- Implementing strong encryption measures to protect data both at rest and in transit.

- Enforcing access controls to restrict access to data only to authorized individuals.

- Regularly monitoring and auditing ATI systems for security breaches or vulnerabilities.

- Educating users on data privacy and security best practices.
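The access-control and auditing practices listed above can be sketched together in a few lines. This is an illustrative example under stated assumptions, not a production design: the role names, the `ROLE_PERMISSIONS` mapping, the `User` class, and the logger name `ati.audit` are hypothetical. It uses only Python's standard `logging` and `dataclasses` modules.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
# Hypothetical logger name; every access decision is recorded for later audit.
audit_log = logging.getLogger("ati.audit")

# Hypothetical mapping from role to the actions that role may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "data_steward": {"read", "write"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_authorized(user, action):
    """Allow an action only if the user's role grants it, and log the decision."""
    # Unknown roles get an empty permission set, so access is denied by default.
    allowed = action in ROLE_PERMISSIONS.get(user.role, set())
    audit_log.info(
        "user=%s role=%s action=%s allowed=%s",
        user.name, user.role, action, allowed,
    )
    return allowed


print(is_authorized(User("alice", "analyst"), "read"))
print(is_authorized(User("alice", "analyst"), "write"))
```

Denying by default and logging both granted and refused requests keeps the audit trail complete, which supports the monitoring practice in the list above.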

Potential Risks to Data Privacy and Security

ATI systems pose potential risks to data privacy and security, including:

- Unauthorized access to data by malicious actors.

- Data breaches due to system vulnerabilities or human error.

- Data loss or corruption due to hardware failures or natural disasters.

To mitigate these risks, it is crucial to implement robust data privacy and security measures and regularly assess and update security protocols to ensure the protection of sensitive information.

Bias and Fairness

ATI systems, including those used for automated software testing, have the potential to introduce bias and unfairness. Bias can arise from various sources, such as the data used to train the AI model, the algorithms employed, and the human biases that may be embedded in the system design or implementation.

Unfairness can result when biased AI systems make decisions that disproportionately affect certain groups of people, leading to disparate outcomes or discrimination.

Examples of Bias and Unfairness in ATI

- Data Bias: ATI systems trained on biased data may inherit and amplify those biases, leading to unfair outcomes. For instance, an ATI system trained on historical data that reflects gender or racial disparities may perpetuate those disparities in its automated testing results.

- Algorithmic Bias: The algorithms used in ATI systems can introduce bias if they are not designed to handle diverse data fairly. For example, an algorithm that relies on a single threshold for pass/fail criteria may be biased against certain groups if their performance distribution differs significantly from the majority.

- Human Bias: Human biases can be introduced into ATI systems through the choices made by developers, testers, and other stakeholders. For instance, if a tester is biased towards a particular type of test case, they may prioritize those cases in the ATI system, leading to an unfair representation of the overall testing needs.
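The single-threshold example above can be illustrated by computing pass rates per group and comparing them. This is a rough sketch under stated assumptions: the function names `pass_rates` and `rate_ratio`, the group names, the scores, and the threshold of 0.6 are all hypothetical, and a ratio of pass rates is only one of several possible fairness measures.

```python
def pass_rates(scores_by_group, threshold):
    """Fraction of scores in each group that meet a single pass/fail threshold."""
    return {
        group: sum(score >= threshold for score in scores) / len(scores)
        for group, scores in scores_by_group.items()
        if scores  # skip empty groups to avoid dividing by zero
    }


def rate_ratio(rates):
    """Ratio of the lowest group pass rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else 1.0


# Hypothetical scores for two groups whose distributions differ.
scores = {
    "group_a": [0.9, 0.8, 0.7, 0.6],
    "group_b": [0.65, 0.55, 0.5, 0.4],
}

rates = pass_rates(scores, threshold=0.6)
ratio = rate_ratio(rates)
print(rates, ratio)
```

A ratio far below 1 suggests that the same threshold affects the groups very differently and that the criterion deserves closer review. It does not by itself establish that the system is unfair.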

Addressing bias and fairness in ATI is crucial to ensure the ethical and responsible development and deployment of these systems. By mitigating bias and promoting fairness, ATI can contribute to more equitable and just outcomes in software testing and beyond.

Societal Impact

Artificial intelligence technologies (ATI) have the potential to bring about significant societal change. They can be used to address some of the world’s most pressing challenges, such as climate change, poverty, and disease. However, it is important to be aware of the potential negative consequences of ATI and to take steps to mitigate them.

Benefits of ATI

- ATI can help us to improve our understanding of the world around us. By collecting and analyzing vast amounts of data, ATI can help us to identify patterns and trends that would be difficult or impossible to find on our own. This information can be used to make better decisions about how to address social and environmental problems.

- ATI can help us to automate tasks that are currently done by humans. This can free up our time to focus on more creative and fulfilling activities. It can also lead to increased productivity and economic growth.

- ATI can help us to create new products and services that would not be possible without it. For example, ATI is being used to develop new medical treatments, self-driving cars, and even artificial intelligence-powered personal assistants.

Potential Negative Consequences of ATI

- ATI could lead to job losses as machines become more capable of performing tasks that are currently done by humans. This could have a significant impact on the economy and on the lives of workers who are displaced by automation.

- ATI could be used to create autonomous weapons systems that could be used to wage war without human intervention. This could lead to a new arms race and increase the risk of conflict.

- ATI could be used to create surveillance systems that could be used to track and monitor people’s activities. This could lead to a loss of privacy and freedom.

Mitigating the Negative Consequences of ATI

Several measures can help mitigate these potential negative consequences:

- Investing in education and training programs to help workers adapt to the changing economy.

- Developing ethical guidelines for the development and use of ATI.

- Creating regulations to protect people’s privacy and freedom.

By taking these steps, we can help to ensure that ATI is used in ways that benefit society rather than harm it.

Policy Recommendations

To address the ethical, legal, and societal considerations associated with ATI, it is imperative to establish robust policies that provide clear guidance and frameworks for its responsible use. These policies should be developed with input from a diverse range of stakeholders, including researchers, policymakers, industry leaders, and representatives from affected communities.

Existing policies may have gaps that need to be addressed. For instance, there may be a lack of clarity regarding data ownership, consent, and the use of sensitive data. By identifying these gaps and proposing recommendations for their resolution, we can strengthen the ethical and legal framework surrounding ATI.

Stakeholder Engagement

Engaging stakeholders in policy development is crucial for ensuring that diverse perspectives are considered and that the policies are responsive to the needs of all affected parties. This includes involving researchers who use ATI, policymakers who regulate its use, industry leaders who develop and deploy ATI systems, and representatives from communities that may be impacted by its use.

By fostering a collaborative and inclusive process, we can develop policies that are both effective and widely supported.

Addressing Gaps in Existing Policies

To address gaps in existing policies, it is necessary to identify the specific areas where the policies fall short. This may involve conducting a comprehensive review of current policies, seeking feedback from stakeholders, and analyzing case studies that highlight the limitations of existing frameworks.

Once the gaps have been identified, specific recommendations can be developed to address them. For example, if a policy does not adequately address the issue of data ownership, a recommendation could be made to clarify the rights and responsibilities of data owners and users.

FAQ Insights

What are the key ethical concerns associated with ATI?

Potential ethical implications include bias, transparency, accountability, and the impact on human values and decision-making.

What legal frameworks govern the development and deployment of ATI?

Applicable legal frameworks vary by jurisdiction but may include data protection laws, intellectual property laws, and regulations on AI-specific applications.

How can we address bias and fairness in ATI systems?

Mitigating bias requires diverse training data, transparent algorithms, and ongoing monitoring to identify and address potential biases.